How do our ears judge the direction of sounds?

One of the functions of hearing is to tell us where things are. Our ears can give us a rough idea of where a sound is coming from.

The retina of each eye contains about a million ganglion cells, so vision has roughly two million channels of information for telling where things are. Hearing, by contrast, has a bottleneck: it has only two channels, the eardrums of the left and right ears. Working out where a sound comes from using only these two vibrating membranes is like placing two sensors at the edge of a lake to pick up its ripples, and trying to work out from them how many boats are on the lake and where they are. You can imagine how difficult that is.

Our brains solve this problem in several ways, some of which I have briefly introduced before.

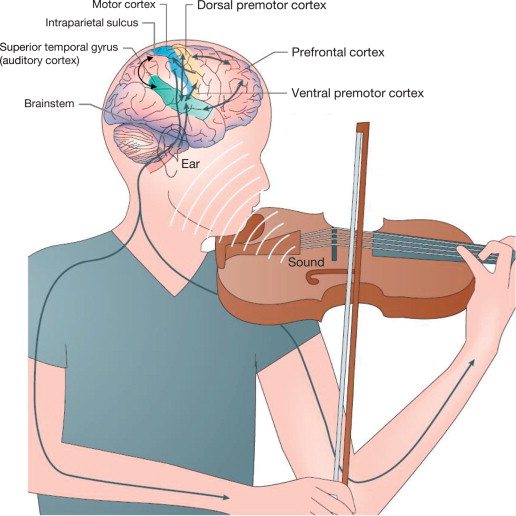

Single sound source localization in the human auditory system.

The ear closer to the sound source hears the sound first, and the difference between the times at which the sound reaches the two ears can be used to determine where the source is. This cue is called the binaural time difference (also known as the interaural time difference).
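To get a feel for how small these time differences are, here is a minimal sketch using Woodworth's spherical-head approximation, a standard textbook formula that this article does not itself use. It treats the head as a rigid sphere of radius `HEAD_RADIUS` (assumed here to be 8.75 cm) and assumes a distant source in the horizontal plane at azimuth θ, measured from straight ahead: ITD ≈ (a / c)(θ + sin θ), valid for 0° ≤ θ ≤ 90°.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
HEAD_RADIUS = 0.0875    # m, an assumed typical adult head radius


def woodworth_itd(azimuth_deg: float) -> float:
    """Approximate binaural time difference in seconds.

    Woodworth's spherical-head formula for a distant source in the horizontal
    plane; azimuth is measured from straight ahead and must lie in 0..90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("formula is only valid for azimuths from 0 to 90 degrees")
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta))


for angle in (0, 15, 30, 45, 60, 90):
    print(f"{angle:>2} deg -> {woodworth_itd(angle) * 1e6:5.0f} microseconds")
```

With these assumed values, the largest difference, for a sound directly to one side, is only about 0.66 ms, which shows how fine the timing differences are that the brain has to work with.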

The ear closer to the sound source also hears it louder, because the head partly blocks the sound on its way to the far ear. This cue is called the binaural level difference.

These two cues let us locate sounds in the horizontal plane: low-frequency sounds are localized mainly by the time difference, and high-frequency sounds mainly by the level difference.
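One common intuition for this split compares the wavelength of a sound with the size of the head. The sketch below is a back-of-the-envelope illustration under that assumption, not a model of real hearing; the head width of 17.5 cm is an assumed typical value, and real listeners show a gradual transition between the two cues rather than a sharp cutoff.

```python
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
HEAD_WIDTH = 0.175      # m, an assumed typical ear-to-ear distance


def wavelength(frequency_hz: float) -> float:
    """Wavelength of a sound in air, in metres."""
    return SPEED_OF_SOUND / frequency_hz


def dominant_cue(frequency_hz: float) -> str:
    """Crude rule of thumb.

    Waves longer than the head bend around it and leave little level
    difference, so timing carries most of the direction information; waves
    shorter than the head are shadowed by it, so the level difference becomes
    the more useful cue.
    """
    return "time difference" if wavelength(frequency_hz) > HEAD_WIDTH else "level difference"


for f in (200, 500, 1000, 2000, 4000, 8000):
    print(f"{f:>5} Hz: wavelength {wavelength(f) * 100:6.1f} cm -> {dominant_cue(f)}")
```

With these assumed numbers the switch-over falls near 2 kHz, where the wavelength becomes comparable to the width of the head.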

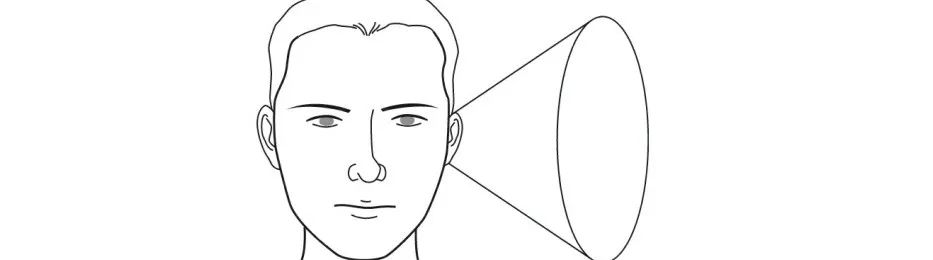

However, this kind of localization is ambiguous, because sounds do not come only from the horizontal plane; they can come from in front, behind, above or below. A sound 45° to the front right and one 45° to the back right, for example, produce exactly the same binaural time and level differences. If you rely on these two cues alone, all the positions that share them form a cone around the line through the two ears, often called the "cone of confusion". We therefore need additional information to reduce the ambiguity.
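The ambiguity can be reproduced with simple geometry. The minimal sketch below assumes two point-like ears in free space and ignores the head itself, purely to keep the geometry simple. In this model the arrival times depend only on how far the source lies to the side (its `x` coordinate) and on its distance, so sources in front, behind and above can give identical time differences. The ear offset, the 2 m source distance and the coordinate convention are assumptions for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s
EAR_OFFSET = 0.0875     # m, assumed half-distance between the ears
# Coordinates: x points to the listener's right, y straight ahead, z straight up.
LEFT_EAR = (-EAR_OFFSET, 0.0, 0.0)
RIGHT_EAR = (EAR_OFFSET, 0.0, 0.0)


def source_position(azimuth_deg, elevation_deg, distance_m=2.0):
    """Cartesian position of a source; azimuth is clockwise from straight ahead."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (distance_m * math.cos(el) * math.sin(az),
            distance_m * math.cos(el) * math.cos(az),
            distance_m * math.sin(el))


def itd(source):
    """Arrival-time difference in seconds; positive when the right ear hears first."""
    return (math.dist(source, LEFT_EAR) - math.dist(source, RIGHT_EAR)) / SPEED_OF_SOUND


front_right = source_position(45, 0)
back_right = source_position(135, 0)
above_right = source_position(90, 45)  # straight to the right, raised 45 degrees
due_right = source_position(90, 0)

for name, pos in [("front right", front_right), ("back right", back_right),
                  ("above right", above_right), ("due right", due_right)]:
    print(f"{name:>11}: ITD = {itd(pos) * 1e6:6.1f} microseconds")
```

The first three positions print the same time difference, while the source due right gives a larger one. That is the cone of confusion in miniature.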

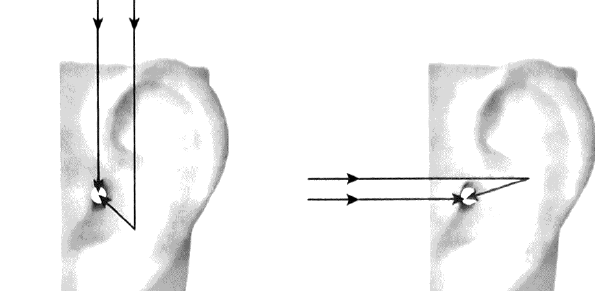

To localize a sound in the vertical plane, the brain needs the sound's spectral information. The direction a sound comes from affects how it is reflected by the outer ear (commonly just called the "ear"; the technical term is "the pinna"). Depending on the direction of the source, different frequencies are boosted or attenuated. In addition, our two ears differ slightly in shape, so each one alters the sound slightly differently, which further helps the brain judge direction from the spectral information.

The brain relies mainly on the binaural time difference: when the cues conflict, this one dominates. The spectral cues that provide vertical-plane information are imprecise and often misleading.

It is precisely because of this ambiguity that we turn our heads while listening. By repeatedly sampling the cues from different head positions, we can reduce the uncertainty and build up a complementary body of evidence about where the sound is likely coming from. Birds, for example, keep turning their heads, sometimes while listening for insects, to reduce their uncertainty about where a sound is, just as we do.
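Here is why a head turn helps. The minimal sketch below uses the simple far-field approximation ITD ≈ (d / c) sin(azimuth), with an assumed ear spacing `d`; the function names are hypothetical. A source at 45° (front right) and one at 135° (back right) give the same ITD. After the head turns to the right, however, the ITD of the front source shrinks while that of the back source grows, so the direction of the change reveals front or back. Sources almost exactly to one side change too little for this to work reliably.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s
EAR_SPACING = 0.175     # m, assumed distance between the ears


def far_field_itd(azimuth_deg: float) -> float:
    """Simple far-field ITD in seconds for a source in the horizontal plane.

    Azimuth is clockwise from straight ahead (90 = right, 180 = behind);
    the result is positive when the right ear hears the sound first.
    """
    return EAR_SPACING * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND


def itd_after_turn(source_azimuth_deg: float, head_turn_deg: float) -> float:
    """ITD after turning the head to the right by head_turn_deg.

    Turning the head right moves the source the same angle to the left
    relative to the head.
    """
    return far_field_itd(source_azimuth_deg - head_turn_deg)


def front_or_back(itd_before: float, itd_after: float, head_turn_deg: float) -> str:
    """Guess whether the source is in front of or behind the listener.

    For a small turn the ITD changes by about -turn * cos(azimuth) * d / c;
    cos(azimuth) is positive in front and negative behind, so the sign of
    (change in ITD) * (turn) separates the two cases.
    """
    return "front" if (itd_after - itd_before) * head_turn_deg < 0 else "back"


turn = 10.0  # degrees to the right
for azimuth in (45, 135, -30, -150):
    before = far_field_itd(azimuth)
    after = itd_after_turn(azimuth, turn)
    print(f"source at {azimuth:>4} deg: ITD {before * 1e6:6.1f} -> {after * 1e6:6.1f}"
          f" microseconds, judged {front_or_back(before, after, turn)}")
```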

The more information a sound contains, the easier it is to locate. A noise made up of many different frequencies is therefore easier to locate than a single tone. This is why broadband white noise is now used in some vehicle warning sounds, such as reversing alarms, instead of the pure-tone signal used in the past.
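One way to see why a broadband sound helps is to model time-difference estimation as a cross-correlation between the two ear signals, looking for the lag at which they match best. The article does not say the brain works exactly this way, so treat this as a minimal sketch under that assumption. It assumes a 48 kHz sample rate, a made-up delay of 12 samples (0.25 ms) between the ears, and compares white noise with a 3 kHz pure tone.

```python
import math
import random

TRUE_DELAY = 12  # samples by which the right ear lags the left (0.25 ms at 48 kHz)
MAX_LAG = 32     # search range in samples, about +/-0.67 ms at 48 kHz
N = 4000         # number of samples analysed per ear


def ear_signals(source, delay):
    """Left ear receives the sound `delay` samples before the right ear."""
    left = source[delay:delay + N]
    right = source[:N]
    return left, right


def cross_correlation(left, right, lag):
    """Mean of left[n] * right[n + lag] over the samples where both exist."""
    start, stop = max(0, -lag), min(len(left), len(right) - lag)
    return sum(left[n] * right[n + lag] for n in range(start, stop)) / (stop - start)


def candidate_delays(left, right, tolerance=0.01):
    """All lags whose correlation is within `tolerance` (relative) of the best one."""
    scores = {lag: cross_correlation(left, right, lag)
              for lag in range(-MAX_LAG, MAX_LAG + 1)}
    best = max(scores.values())
    return [lag for lag, score in scores.items() if score >= best * (1 - tolerance)]


random.seed(1)
noise = [random.gauss(0.0, 1.0) for _ in range(N + TRUE_DELAY)]
# A 3 kHz tone sampled at 48 kHz repeats every 16 samples.
tone = [math.sin(2 * math.pi * n / 16) for n in range(N + TRUE_DELAY)]

print("noise:", candidate_delays(*ear_signals(noise, TRUE_DELAY)))
print("tone: ", candidate_delays(*ear_signals(tone, TRUE_DELAY)))
```

The noise yields a single candidate, the true delay, while the tone yields several equally good candidates spaced one period (16 samples) apart. A repeating waveform simply cannot say which cycle in one ear matches which cycle in the other.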

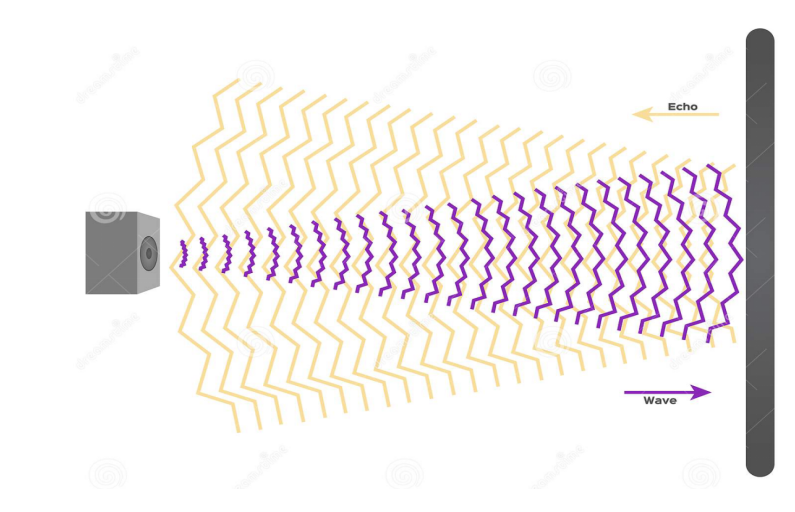

Echoes are an even more misleading factor. Examining how we process echoes gives a good sense of how complex sound localization is. Most environments, including rooms, concert venues and valleys, produce echoes.

It's hard enough to tell where a single sound is coming from, let alone to sort out the direct sound, its reflections and its reverberation, all arriving from different directions. Fortunately, the auditory system has special mechanisms for reducing the localization errors this would otherwise cause.

When the original sound and its echo reach your ears within a very short interval, the brain combines them into a single group, and only the first-arriving original sound represents the whole group. This is known as the Haas effect, also called the precedence effect.

The time-difference threshold for the Haas effect is about 30-50 milliseconds. If two sounds arrive further apart than this, you hear two separate sounds coming from two places; this is what we usually call an echo. If you produce an echo and then shorten the time difference from above the threshold to below it, you can experience the effect for yourself.
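The grouping rule described above can be written as a toy model. The sketch below is hypothetical and purely illustrative: it assumes a fixed fusion window of 50 ms (the upper end of the range above) and simply merges every copy of a sound that arrives within that window of the first copy, reporting the group at the direction of that first copy. Real perception is far more graded than this.

```python
from dataclasses import dataclass


@dataclass
class Arrival:
    time_ms: float        # when this copy of the sound reaches the listener
    direction_deg: float  # direction this copy comes from


def perceived_sounds(arrivals, fusion_window_ms=50.0):
    """Toy precedence-effect model (hypothetical, for illustration only).

    Copies arriving within fusion_window_ms of the first copy of a group are
    merged into that group, which is heard from the direction of its first
    copy. A copy arriving later starts a new, separately heard sound.
    """
    sounds = []
    group_start = None
    for arrival in sorted(arrivals, key=lambda a: a.time_ms):
        if group_start is None or arrival.time_ms - group_start > fusion_window_ms:
            group_start = arrival.time_ms
            sounds.append((arrival.time_ms, arrival.direction_deg))
    return sounds


direct = Arrival(0.0, 0.0)             # direct sound from straight ahead
early_reflection = Arrival(20.0, 60.0)  # reflection from a wall to the right, 20 ms later
late_echo = Arrival(80.0, 60.0)         # the same wall much further away, 80 ms later

print(perceived_sounds([direct, early_reflection]))  # one sound, heard straight ahead
print(perceived_sounds([direct, late_echo]))         # two sounds
```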

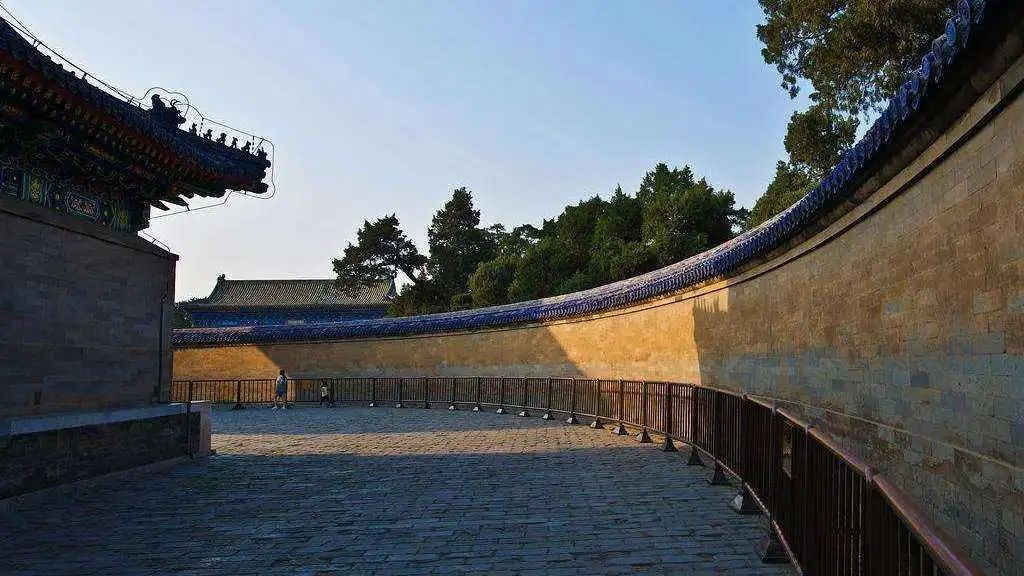

Try clapping your hands in front of a large wall (such as the Echo Wall of the Temple of Heaven) to experience the Haas effect. Stand about 10 meters from the wall and clap. At this distance, the original clap and its echo arrive more than 50 milliseconds apart, so you hear two sounds.

Now walk toward the wall while continuing to clap. At about 5 meters from the wall, where the time difference between the original sound and the echo is less than 50 milliseconds, you no longer hear two sounds. The original sound and the echo have merged and seem to be a single sound coming from the direction of the original. This is the precedence effect at work. Of course, it is only one of many mechanisms that help us locate sounds.
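The numbers in this experiment follow from simple arithmetic: the echo travels to the wall and back, so it lags the direct sound by twice the distance to the wall divided by the speed of sound. A minimal sketch, assuming a speed of sound of 343 m/s and treating 50 ms as the fusion threshold:

```python
SPEED_OF_SOUND = 343.0    # m/s, air at roughly 20 °C
HAAS_THRESHOLD_MS = 50.0  # upper end of the 30-50 ms range given above


def echo_delay_ms(distance_to_wall_m: float) -> float:
    """Extra travel time of the echo: to the wall and back again."""
    return 2 * distance_to_wall_m / SPEED_OF_SOUND * 1000


def wall_distance_for_delay(delay_ms: float) -> float:
    """Distance from the wall at which the echo lags by delay_ms."""
    return delay_ms / 1000 * SPEED_OF_SOUND / 2


for distance in (10, 5):
    delay = echo_delay_ms(distance)
    verdict = "two separate sounds" if delay > HAAS_THRESHOLD_MS else "one fused sound"
    print(f"{distance:>2} m from the wall: echo {delay:.1f} ms later -> {verdict}")
```

At 10 m the echo lags by about 58 ms, and at 5 m by about 29 ms, consistent with the experiment. By the same estimate, the two sounds should start to fuse somewhere between about 8.6 m (50 ms) and 5.1 m (30 ms) from the wall.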

In any case, hearing can quickly give us a rough idea of where a sound is coming from, which is enough for us to turn toward it and deal with it.

Guangdong Nandi Yanlong Technology Co., Ltd.

Post time: Oct-22-2022